VideoPoet, developed by Google Research, is a large language model for video generation that stands out for producing large, coherent, high-fidelity motion.

VideoPoet turns an autoregressive language model into a high-quality video generator. It uses the MAGVIT-v2 tokenizer for images and video and the SoundStream tokenizer for audio. Together, these tokenizers convert images, videos, and audio clips of varying lengths into sequences of discrete codes drawn from a unified vocabulary.
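To make the idea of a unified vocabulary concrete, here is a minimal sketch. It assumes each tokenizer emits integer codes from its own codebook and that the shared vocabulary simply places those codebooks in disjoint ID ranges. The segment names (`special`, `visual`, `audio`), their sizes, and the helper functions are hypothetical and are not VideoPoet's actual configuration; the tokenizers themselves are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VocabSegment:
    """A contiguous ID range reserved for one tokenizer's codebook (hypothetical)."""
    name: str
    offset: int
    size: int


def build_vocab(sizes: dict[str, int]) -> dict[str, VocabSegment]:
    """Assign each codebook a non-overlapping ID range, in insertion order."""
    segments: dict[str, VocabSegment] = {}
    offset = 0
    for name, size in sizes.items():
        segments[name] = VocabSegment(name, offset, size)
        offset += size
    return segments


def to_unified(codes: list[int], seg: VocabSegment) -> list[int]:
    """Shift tokenizer-local codes into the shared vocabulary."""
    for c in codes:
        if not 0 <= c < seg.size:
            raise ValueError(f"code {c} is outside the {seg.name} codebook")
    return [seg.offset + c for c in codes]


def from_unified(ids: list[int], seg: VocabSegment) -> list[int]:
    """Map shared-vocabulary IDs back to tokenizer-local codes."""
    for i in ids:
        if not seg.offset <= i < seg.offset + seg.size:
            raise ValueError(f"id {i} does not belong to {seg.name}")
    return [i - seg.offset for i in ids]


# Illustrative sizes only; they are not VideoPoet's real codebook sizes.
vocab = build_vocab({"special": 8, "visual": 1024, "audio": 256})
video_ids = to_unified([0, 5, 1023], vocab["visual"])  # [8, 13, 1031]
audio_ids = to_unified([0, 255], vocab["audio"])        # [1032, 1287]
```

Because every modality lives in its own ID range, a single sequence can interleave visual and audio tokens without ambiguity, and `from_unified` recovers the codes each tokenizer's decoder would expect.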

Because these codes are compatible with text-based language models, they can be combined with other modalities, such as text. An autoregressive language model then learns across video, image, audio, and text to predict the next video or audio token in the sequence.
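The next-token objective can be illustrated with a minimal greedy decoding loop. This is a sketch under stated assumptions: the scoring function is a deterministic toy rather than a trained model, the ID ranges for text-like and video-like tokens are hypothetical, and restricting decoding to the target modality's ID range is an illustrative choice, not a documented detail of VideoPoet.

```python
from typing import Callable, Sequence

# Any function that maps the sequence so far to one score per vocabulary ID.
# In VideoPoet this role is played by a transformer; here it is a stub.
ScoreFn = Callable[[Sequence[int]], list[float]]


def generate(model: ScoreFn, prefix: list[int], allowed: range, steps: int) -> list[int]:
    """Greedy autoregressive decoding restricted to one ID range."""
    seq = list(prefix)
    for _ in range(steps):
        scores = model(seq)
        # Pick the best-scoring ID inside the target modality's range;
        # ties go to the lowest ID because max() keeps the first maximum.
        next_id = max(allowed, key=lambda i: scores[i])
        seq.append(next_id)  # the new token conditions every later step
    return seq[len(prefix):]


def toy_model(seq: Sequence[int]) -> list[float]:
    """Deterministic stand-in: prefers the ID after the last one (mod 16)."""
    vocab_size = 16
    last = seq[-1] if seq else 0
    target = (last + 1) % vocab_size
    return [1.0 if i == target else 0.0 for i in range(vocab_size)]


# IDs 0-7 play the role of text tokens, 8-15 of video tokens (hypothetical).
video_tokens = generate(toy_model, prefix=[2, 3], allowed=range(8, 16), steps=3)
```

With this toy model, the prefix `[2, 3]` yields `[8, 9, 10]`: the first step has no preference inside 8-15 and falls back to the lowest ID, after which each generated token conditions the next. A real system would typically sample from the model's distribution rather than always take the maximum.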

VideoPoet's training framework mixes several multimodal generative learning objectives: text-to-video, text-to-image, image-to-video, video frame continuation, video inpainting and outpainting, video stylization, and video-to-audio.
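One common way to train a single model on several tasks like these is to serialize every task into the same token-sequence format, prefixed by a task marker, and to compute the loss only on the target portion. The sketch below assumes that layout; the task IDs in `TASK_TOKENS`, the `SEP` separator, and the masking scheme are hypothetical and are not taken from VideoPoet.

```python
from dataclasses import dataclass

# Hypothetical task-marker IDs; VideoPoet's real special tokens may differ.
TASK_TOKENS = {
    "text_to_video": 0,
    "image_to_video": 1,
    "frame_continuation": 2,
    "video_to_audio": 3,
}
SEP = 4  # hypothetical separator between conditioning and target tokens


@dataclass
class Example:
    tokens: list[int]
    loss_mask: list[bool]  # True where the model is trained to predict the token


def make_example(task: str, condition: list[int], target: list[int]) -> Example:
    """Lay out [task marker, conditioning tokens, SEP, target tokens]."""
    prefix = [TASK_TOKENS[task], *condition, SEP]
    tokens = prefix + target
    # Only the target tokens contribute to the next-token loss.
    loss_mask = [False] * len(prefix) + [True] * len(target)
    return Example(tokens, loss_mask)


# Video-to-audio: condition on (hypothetical) video IDs, predict audio IDs.
example = make_example("video_to_audio", condition=[10, 11], target=[20, 21, 22])
```

Here `example.tokens` is `[3, 10, 11, 4, 20, 21, 22]` and only the last three positions are marked for the loss. Sharing one format lets the same next-token objective cover every task, with the task marker telling the model which kind of output to produce.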

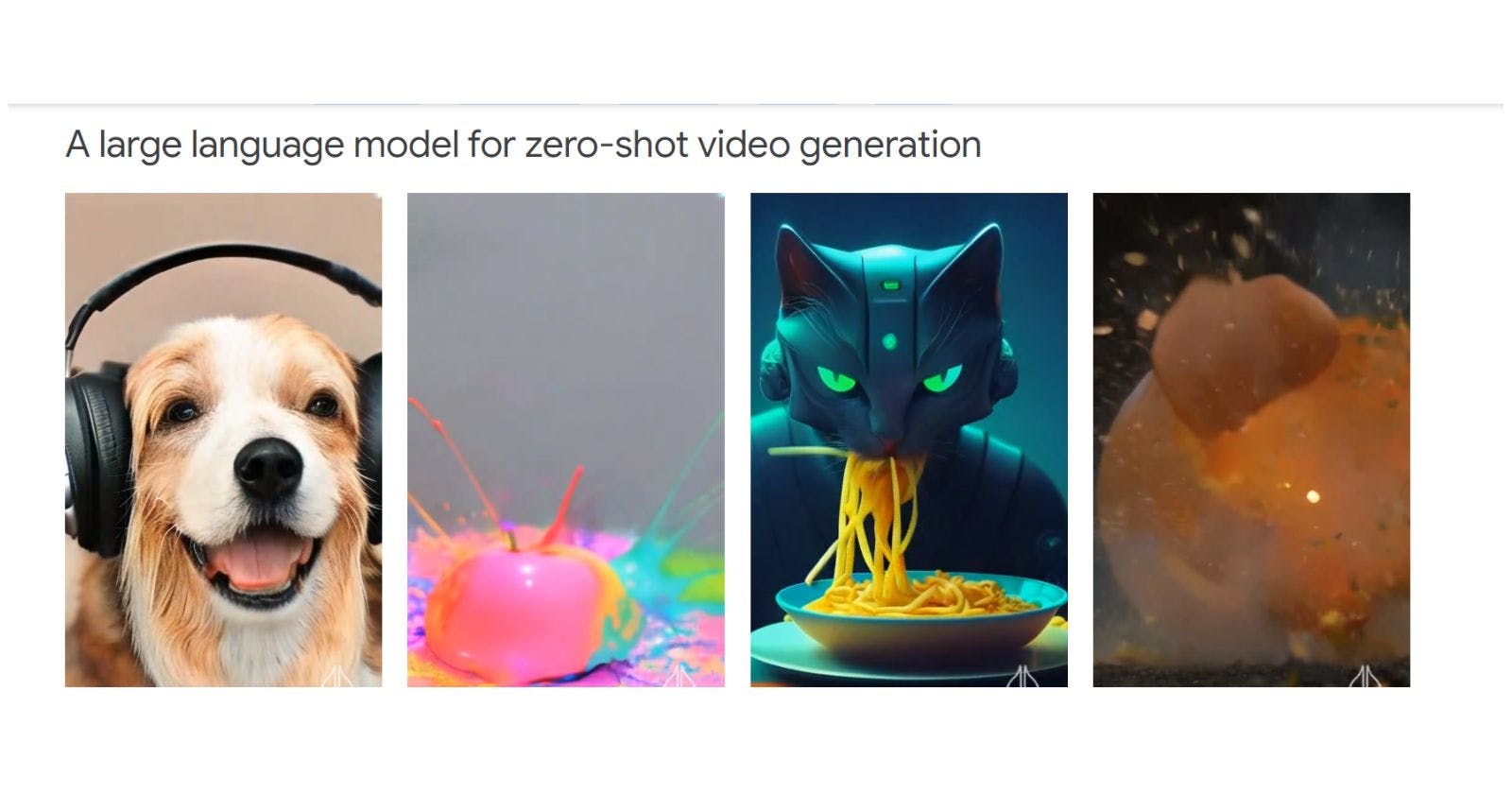

VideoPoet can generate videos in both square and portrait orientation, which makes it suitable for short-form content. It can also generate audio from an input video.

Because it can multitask across a range of video-centric inputs and outputs, VideoPoet demonstrates how language models can synthesize and edit videos while maintaining strong temporal consistency.